preference elicitation

Elicitation of preferences is a crucial problem in AI. Many AI algorithms and techniques aim at providing services on behalf of a user, but in most cases a clear objective or utility function is not provided upfront. While in traditional decision theory preferences (in the form of utility functions) have been acquired through long and tedious procedures, modern systems must weigh the cost of preference elicitation against its expected benefit.

This preference bottleneck is further complicated by the limits of individual rationality and by psychological biases.

These topics are elaborated in my research statement.

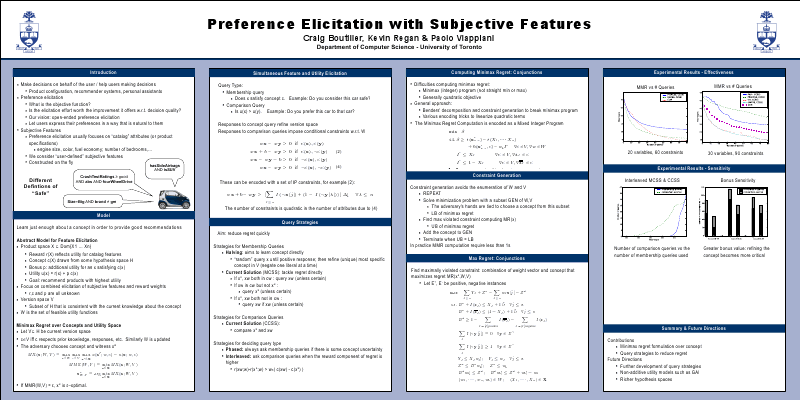

preference elicitation with subjective features

Most models of utility elicitation in decision support and interactive optimization assume a predefined set of 'catalog' features over which user preferences are expressed. However, users may differ in the features over which they are most comfortable expressing their preferences. In this project we consider the problem of feature elicitation: a utility function is expressed using features whose definitions are unknown Boolean formulas. We cast this as a problem of concept learning, but one whose goal is to identify only enough about the concept to enable a good decision to be recommended.
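The idea of learning only as much of the concept as the decision requires can be illustrated with a toy sketch. Everything in it is an assumption for illustration, not the algorithm from the ICML paper: the unknown feature is restricted to a monotone conjunction of Boolean attributes, an item that has the feature earns a fixed `bonus` on top of a known base utility, the user answers membership queries ("does this item have the feature?") without noise, and queries are chosen by a simple split heuristic. The names `elicit`, `max_regret` and `split_size` are hypothetical. Elicitation stops as soon as the minimax-regret recommendation has max regret at most `eps` over all concepts still consistent with the answers.

```python
from itertools import combinations

# Toy model (assumptions for illustration only):
# - an item is (name, attrs, base): attrs is a tuple of 0/1 values and base is
#   the utility already explained by known catalog features;
# - the subjective feature is an unknown monotone conjunction of attributes,
#   represented as a frozenset of attribute indices;
# - an item that has the feature earns an extra `bonus` of utility.

def conjunctions(n):
    """All monotone conjunctions over n attributes (the hypothesis space)."""
    return [frozenset(c) for k in range(n + 1) for c in combinations(range(n), k)]

def has_feature(attrs, concept):
    return all(attrs[i] for i in concept)

def utility(item, concept, bonus):
    _, attrs, base = item
    return base + (bonus if has_feature(attrs, concept) else 0.0)

def max_regret(x, items, hyps, bonus):
    """Worst-case loss of recommending x, over all items and consistent concepts."""
    return max(utility(y, c, bonus) - utility(x, c, bonus) for c in hyps for y in items)

def split_size(item, hyps):
    """Number of hypotheses eliminated in the worst case by asking about `item`."""
    yes = sum(has_feature(item[1], c) for c in hyps)
    return min(yes, len(hyps) - yes)

def elicit(items, n_attrs, true_concept, bonus=1.0, eps=0.0):
    """Ask membership queries until minimax regret is at most eps.
    Returns (recommended item name, number of queries asked)."""
    hyps = conjunctions(n_attrs)
    queries = 0
    while True:
        best = min(items, key=lambda x: max_regret(x, items, hyps, bonus))
        candidates = [it for it in items if split_size(it, hyps) > 0]
        if max_regret(best, items, hyps, bonus) <= eps or not candidates:
            return best[0], queries
        q = max(candidates, key=lambda it: split_size(it, hyps))
        answer = has_feature(q[1], true_concept)  # simulated, noise-free user
        hyps = [c for c in hyps if has_feature(q[1], c) == answer]
        queries += 1

ITEMS = [
    ("A", (1, 1, 0), 0.5),
    ("B", (1, 0, 1), 0.6),
    ("C", (0, 1, 1), 0.4),
    ("D", (1, 1, 1), 0.2),
]
print(elicit(ITEMS, 3, frozenset({0, 1})))
```

On this data the call returns `('A', 2)`: after two queries the concepts `{1}` and `{0, 1}` are both still consistent with the answers, so the feature has not been fully identified, yet both agree that A is the best item. Realistic hypothesis spaces would need something more scalable than this exhaustive regret computation.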

- Craig Boutilier, Kevin Regan, Paolo Viappiani: Online feature elicitation in interactive optimization. ICML 2009: 10 [PDF]

optimal recommendation sets

In this project we focus on providing joint recommendations (sets of items) in conversational recommender systems. Suppose the 'utility' of a set is the highest utility of the items it contains, where item utilities are uncertain, and suppose the user answers a query by telling which item in the set they prefer. A theoretical result is that, with regret as the measure, the best recommendation set is also the best elicitation query set, that is, the query that minimizes the regret remaining after the user's answer has been incorporated.

We apply our setwise optimization strategies to dynamic critiquing, where our regret-based model outperforms state-of-the-art approaches.
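The regret notions above can be made concrete with a brute-force sketch. It assumes linear utilities (`u_w(x) = w . x`), represents the uncertainty about the user as a small finite set `W` of feasible weight vectors, and enumerates all k-subsets, so it only suits toy instances and is not the method from the paper. Setwise max regret is the worst-case loss, over `W`, of taking the best item in the set instead of the best item overall. To read the set as a query, the user (assumed noise-free, with ties going to the earlier item) picks a favorite, which splits `W`; the query's worst-case regret is the largest minimax regret remaining over the possible answers. All function names are hypothetical.

```python
from itertools import combinations

# Assumptions for illustration: items are attribute vectors, utility is linear
# (u_w(x) = w . x), and uncertainty about the user is a finite set W of
# feasible weight vectors.

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def setwise_max_regret(S, items, W):
    """Worst case over W of the loss from taking the best item in S
    instead of the best item overall."""
    return max(max(dot(w, y) for y in items) - max(dot(w, x) for x in S) for w in W)

def minimax_regret(items, W):
    """Minimax regret of a single-item recommendation over W."""
    return min(setwise_max_regret((x,), items, W) for x in items)

def optimal_set(items, W, k):
    """Brute-force the k-subset with the smallest setwise max regret."""
    return min(combinations(items, k), key=lambda S: setwise_max_regret(S, items, W))

def worst_case_query_regret(S, items, W):
    """Use S as a query: a noise-free user picks their favorite item in S
    (ties go to the earlier item). W is split by the answer; return the
    largest minimax regret left over the possible answers."""
    parts = {}
    for w in W:
        choice = max(range(len(S)), key=lambda i: dot(w, S[i]))
        parts.setdefault(choice, []).append(w)
    return max(minimax_regret(items, Wi) for Wi in parts.values())

ITEMS = [(1.0, 0.0), (0.0, 1.0), (0.6, 0.6), (0.8, 0.3)]
W = [(1.0, 0.0), (0.75, 0.25), (0.5, 0.5), (0.25, 0.75), (0.0, 1.0)]

best = optimal_set(ITEMS, W, 2)
print(best, setwise_max_regret(best, ITEMS, W))
# Used as a query, the optimal recommendation set should leave no more
# worst-case regret than any other pair of items.
print(worst_case_query_regret(best, ITEMS, W)
      <= min(worst_case_query_regret(S, ITEMS, W) for S in combinations(ITEMS, 2)))
```

Here the optimal pair is the two extreme items `(1.0, 0.0)` and `(0.0, 1.0)`, with setwise max regret of about 0.1, and the final check prints `True`, in line with the result stated above. The optimum need not be unique: in this data another pair ties with it as a query.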

- Paolo Viappiani, Craig Boutilier: Regret-based Optimal Recommendation Sets in Conversational Recommender Systems. ACM Recommender Systems 2009 [PDF]

human factors in preference-based search

It is well known from behavioral decision-making research and psychology that humans are often poor decision makers. In particular, several biases interfere with our everyday decisions, for example the framing, anchoring and prominence effects. However, these issues have received limited attention in computer science research.

With user studies, I showed how traditional web tools (such as forms that ask the user to answer a list of questions) can often lead to suboptimal choices due to these biases.

- Paolo Viappiani, Pearl Pu, Boi Faltings: Preference-based search with adaptive recommendations. AI Communications 21(2-3): 155-175 (2008) [PDF]

- Paolo Viappiani, Boi Faltings, Pearl Pu: Preference-based Search using Example-Critiquing with Suggestions. Journal of Artificial Intelligence Research (JAIR) 27: 465-503 (2006) [PDF]

- Paolo Viappiani, Boi Faltings, Pearl Pu: Evaluating Preference-based Search Tools: a Tale of Two Approaches. Proceedings of the Twenty-first National Conference on Artificial Intelligence (AAAI-06): 205-211, AAAI Press, Boston, MA, USA, July 2006

phd thesis

I obtained my PhD from EPFL in September 2007 with a thesis titled "Preference-based Search with Suggestions", supervised by Boi Faltings.

In this work, we developed new mixed-initiative tools based on example-critiquing and suggestions, and showed through user studies that they dramatically increase decision accuracy.